Setting up Apache Spark

In this blog, I will focus on setting up a Windows workspace so that we can get started with Apache Spark and do some hands-on work in my upcoming Apache Spark series. If you haven't read the first part yet and would like to, here is the link: https://renjithak.hashnode.dev/demystifying-big-data-analytics-with-apache-spark-part-1

Most of you will already be familiar with setting up the JDK, Eclipse and Gradle. If so, skip the first few sections and go straight to the Apache Spark section. Here I will be using Spark 3.x with OpenJDK 11. The Java versions supported by each Spark release are listed below, and you can find more details in the official Apache Spark documentation: https://spark.apache.org/docs/3.3.2/. If you are using a different JDK version, install a Spark version that is compatible with it.

Spark 3.3.x to 3.5.x: Java 8/11/17

Spark 3.0.x to 3.2.x: Java 8/11

Spark 2.4.x: Java 8

Spark 2.3.x: Java 8

Spark 2.2.x: Java 8

Spark 2.1.x: Java 7/8

Spark 2.0.x: Java 7/8

Now let's begin with the installation steps.

JDK

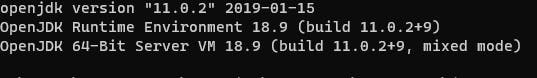

Go to https://jdk.java.net/archive/, select the Windows zip file for build 11.0.2, then download and unzip it.

Next, configure the Java path. Go to Control Panel -> System -> Advanced system settings -> Environment Variables, click New under User variables, and set the JAVA_HOME variable to your JDK location (the unzipped JDK folder, not its bin subfolder) as below.

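To verify the installation, open the Command Prompt and run `java -version`. The output should look like below. Note that the command is only found once the JDK's bin folder is on your PATH, which is covered in the PATH Variables section.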
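If you also want to check which JDK a Java program actually picks up, here is a minimal sketch you can compile and run with the JDK you just installed. The class name JavaVersionCheck is hypothetical, and the set of supported Java versions (8, 11 and 17) is an assumption taken from the Spark 3.3.x documentation. Adjust it for the Spark release you download.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

public class JavaVersionCheck {

    // Assumption: Java 8/11/17, as listed in the Spark 3.3.x documentation.
    // Edit this set to match the Spark release you actually download.
    static final Set<Integer> SUPPORTED = new HashSet<>(Arrays.asList(8, 11, 17));

    // Extracts the feature (major) version from a "java.version" string.
    // Handles the legacy "1.8.0_362" scheme and the newer "11.0.2" scheme.
    static int featureVersion(String javaVersion) {
        String[] parts = javaVersion.split("[._-]");
        int first = Integer.parseInt(parts[0]);
        return (first == 1 && parts.length > 1) ? Integer.parseInt(parts[1]) : first;
    }

    public static void main(String[] args) {
        String version = System.getProperty("java.version");
        int feature = featureVersion(version);
        System.out.println("java.version = " + version + " (feature release " + feature + ")");
        // java.home is the JDK this program is running on; compare it with JAVA_HOME.
        System.out.println("java.home    = " + System.getProperty("java.home"));
        System.out.println("JAVA_HOME    = " + System.getenv("JAVA_HOME"));
        System.out.println(SUPPORTED.contains(feature)
                ? "OK: Java " + feature + " is in the supported set."
                : "Warning: Java " + feature + " is not in the supported set.");
    }
}
```

With JDK 11 you can skip the separate compile step and run `java JavaVersionCheck.java` directly. If the java.home line does not point inside your JAVA_HOME folder, another Java installation is earlier on your PATH.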

Gradle

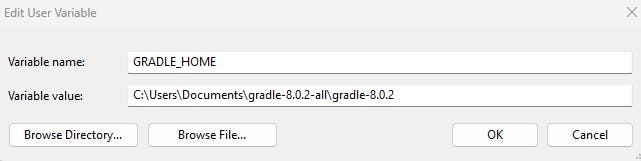

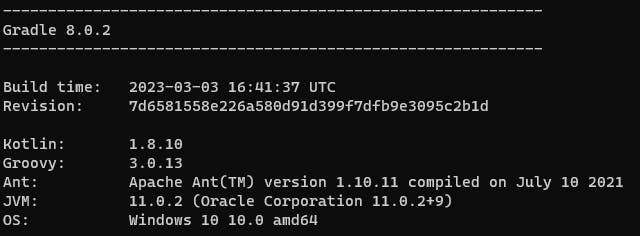

Go to https://gradle.org/install/, scroll to the manual installation section, download the complete distribution, unzip it, and set GRADLE_HOME as below.

To verify the installation, open the Command Prompt and run `gradle -v`. The output should look like below. As with Java, this requires Gradle's bin folder to be on your PATH, as described in the PATH Variables section.

Eclipse

Note: you can use any IDE of your choice.

To install Eclipse, go to https://www.eclipse.org/downloads/packages/release/2023-03/r, select the Windows zip file for Eclipse IDE for Java Developers, then download and unzip it. Launch eclipse.exe from the extracted eclipse folder to make sure it starts.

Apache Spark

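Go to the official download page, https://spark.apache.org/downloads.html, select the release and package type as shown below, then download the archive and extract it using WinRAR, WinZip or 7-Zip. The download is a .tgz file, so 7-Zip extracts it in two steps: first to a .tar file, then to a folder.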

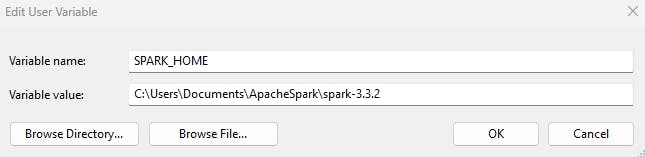

Now set the SPARK_HOME environment variable to the extracted Spark folder as below (Control Panel -> System -> Advanced system settings -> Environment Variables).

On Windows, Spark needs a small piece of Hadoop to run: the winutils.exe helper. For convenience, download winutils.exe from the following link: https://github.com/steveloughran/winutils/blob/master/hadoop-3.0.0/bin/winutils.exe

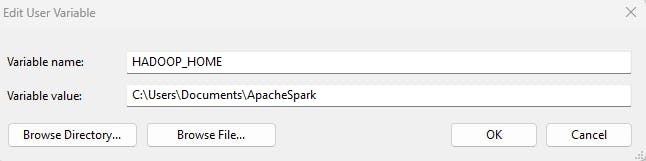

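Set HADOOP_HOME as below to the folder where you placed winutils.exe. The file must sit inside a bin subfolder, and HADOOP_HOME must point to the parent of that bin folder. For example, if the file is at C:\hadoop\bin\winutils.exe, set HADOOP_HOME to C:\hadoop.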
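Hadoop looks for winutils.exe in the bin subfolder of HADOOP_HOME, so a frequent mistake is pointing HADOOP_HOME at the bin folder itself. The sketch below checks a HADOOP_HOME value against that layout. WinutilsCheck is a hypothetical helper written for this post and is not part of Spark or Hadoop.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

public class WinutilsCheck {

    // Hadoop resolves the helper as HADOOP_HOME + bin + winutils.exe,
    // so HADOOP_HOME must be the folder above bin, not bin itself.
    static Path expectedWinutils(String hadoopHome) {
        return Paths.get(hadoopHome, "bin", "winutils.exe");
    }

    // Returns a human-readable verdict for the given HADOOP_HOME value.
    static String check(String hadoopHome) {
        if (hadoopHome == null || hadoopHome.trim().isEmpty()) {
            return "HADOOP_HOME is not set";
        }
        Path winutils = expectedWinutils(hadoopHome.trim());
        if (Files.isRegularFile(winutils)) {
            return "OK: found " + winutils;
        }
        // Common mistake: HADOOP_HOME set to the bin folder instead of its parent.
        if (Files.isRegularFile(Paths.get(hadoopHome.trim(), "winutils.exe"))) {
            return "HADOOP_HOME points at the bin folder; use its parent folder instead";
        }
        return "winutils.exe not found at " + winutils;
    }

    public static void main(String[] args) {
        System.out.println(check(System.getenv("HADOOP_HOME")));
    }
}
```

Run it from a newly opened Command Prompt, because windows opened before you changed the environment variables still see the old values.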

PATH Variables

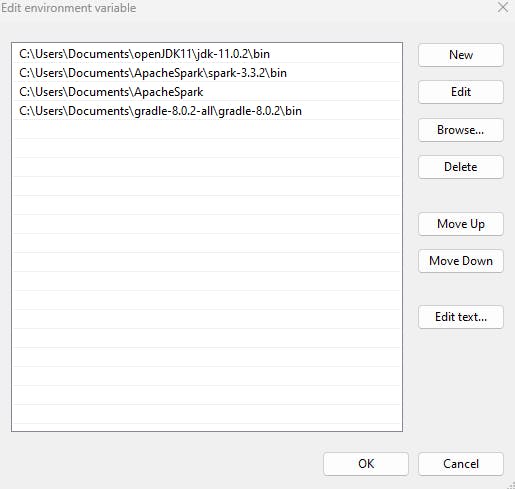

Finally, edit the PATH variable in the Environment Variables section and add the bin folder of each of these locations, as below: %JAVA_HOME%\bin, %GRADLE_HOME%\bin, %SPARK_HOME%\bin and %HADOOP_HOME%\bin.
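To confirm the four variables and their PATH entries in one go, here is a rough Java sketch. PathCheck and its problems method are hypothetical names. The check simply assumes that each tool's executables live in a bin subfolder of its *_HOME folder, which matches the JDK, Gradle, Spark and winutils layout described above. Open a new Command Prompt before running it, since already-open windows do not see updated environment variables.

```java
import java.io.File;
import java.nio.file.InvalidPathException;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class PathCheck {

    // The variables set up in this guide; each is expected to have a bin subfolder.
    static final List<String> HOMES =
            Arrays.asList("JAVA_HOME", "GRADLE_HOME", "SPARK_HOME", "HADOOP_HOME");

    // Returns one message per unset variable or per bin folder missing from PATH.
    static List<String> problems(Map<String, String> env) {
        String path = env.get("PATH");
        if (path == null) {
            path = "";
        }
        // Normalise PATH entries so that a trailing separator does not matter.
        List<Path> entries = new ArrayList<>();
        for (String entry : path.split(File.pathSeparator)) {
            try {
                if (!entry.trim().isEmpty()) {
                    entries.add(Paths.get(entry.trim()).normalize());
                }
            } catch (InvalidPathException e) {
                // Skip entries that are not valid paths (for example, stray quotes).
            }
        }
        List<String> problems = new ArrayList<>();
        for (String name : HOMES) {
            String home = env.get(name);
            if (home == null || home.trim().isEmpty()) {
                problems.add(name + " is not set");
                continue;
            }
            Path bin = Paths.get(home.trim(), "bin").normalize();
            if (!entries.contains(bin)) {
                problems.add(bin + " (from " + name + ") is not on PATH");
            }
        }
        return problems;
    }

    public static void main(String[] args) {
        // Look each variable up individually: System.getenv(String) is typically
        // case-insensitive on Windows, where PATH is often stored as "Path".
        Map<String, String> env = new HashMap<>();
        for (String name : HOMES) {
            env.put(name, System.getenv(name));
        }
        env.put("PATH", System.getenv("PATH"));
        List<String> problems = problems(env);
        if (problems.isEmpty()) {
            System.out.println("All *_HOME variables are set and their bin folders are on PATH.");
        } else {
            problems.forEach(p -> System.out.println("Problem: " + p));
        }
    }
}
```

Taking the environment as a Map keeps the check independent of the real machine, so you can try it with made-up values before touching your own settings.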

Testing Apache Spark

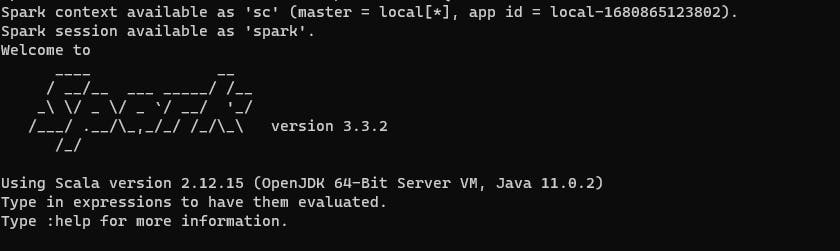

Open the Command Prompt and run the command `spark-shell`. You should see output like the following.

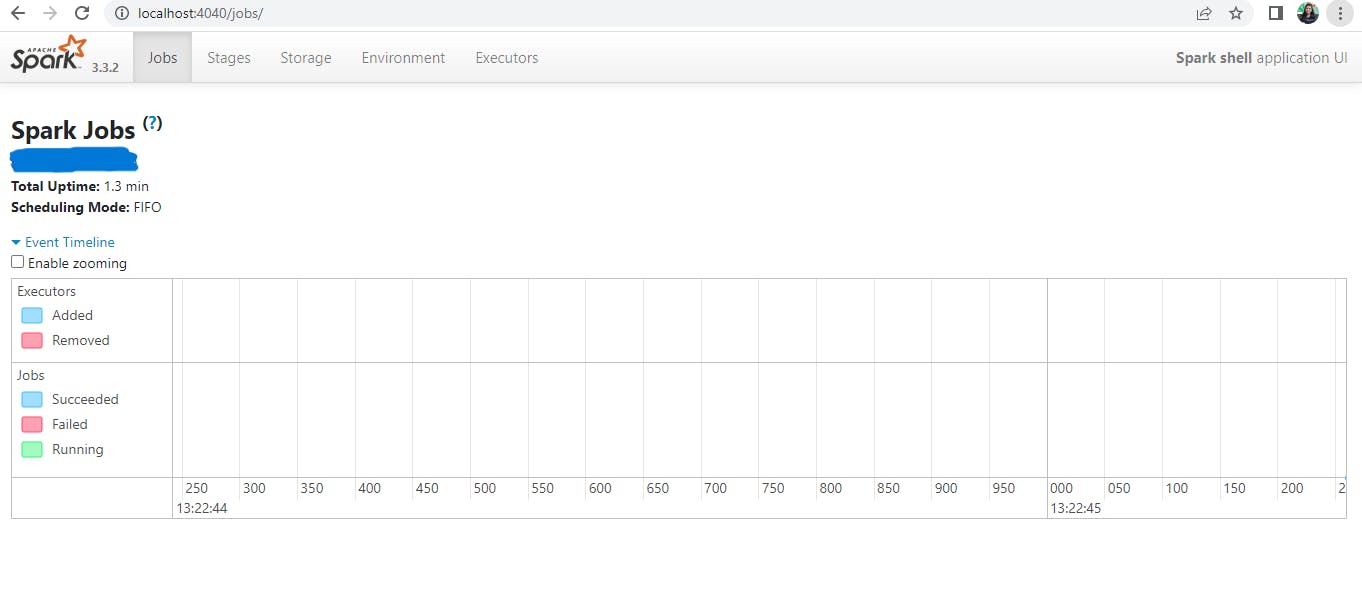

Also, while spark-shell is running, verify that the Spark Web UI is available at http://localhost:4040/, similar to below.
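If you prefer to check the Web UI programmatically, for example from a script that runs after starting spark-shell, the following sketch uses Java 11's built-in java.net.http.HttpClient to send a GET request. SparkUiProbe is a hypothetical class. The default URL assumes the UI is on port 4040, but Spark moves to 4041, 4042 and so on when 4040 is already taken, so the URL can also be passed as an argument.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

public class SparkUiProbe {

    // Sends a GET request and returns the HTTP status code,
    // or -1 if nothing is listening or the request fails.
    static int probe(String url) {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(3))
                // Follow redirects: the Spark UI root typically redirects to its Jobs page.
                .followRedirects(HttpClient.Redirect.NORMAL)
                .build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(url))
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
        try {
            HttpResponse<Void> response =
                    client.send(request, HttpResponse.BodyHandlers.discarding());
            return response.statusCode();
        } catch (IOException e) {
            return -1;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return -1;
        }
    }

    public static void main(String[] args) {
        String url = args.length > 0 ? args[0] : "http://localhost:4040/";
        int status = probe(url);
        System.out.println(status == -1
                ? "No response from " + url + " (is spark-shell still running?)"
                : "Spark UI answered " + url + " with HTTP " + status);
    }
}
```

Run it in a second Command Prompt while spark-shell is still open. Once you leave the shell with `:quit`, the UI stops and the probe reports no response.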

Great, you now have a working setup for Apache Spark!! :)